DIY City 0.01a R&D collaboration - Specialmoves

DIY City 0.01a:

R&D collaboration

Specialmoves Labs

DIY City 0.01a is an R&D collaboration between Specialmoves and Haque Design+Research. Our vision was to create an interactive projection allowing people to reconfigure their city. We agreed on a 10-day sprint of quick, iterative and hopefully very creative work, and that on the 10th day we'd share the results with friends and allies in our courtyard.

I worked very closely with Rik Leigh, Head of .Net (yes - the entire internet) here at Specialmoves, and Usman and Dot from HD+R. Rik and I worked in an XP fashion: iterating rapidly, pair programming and solving problems quickly.

We found this helped us hit the tight deadline.

Day 1

At 10am on day 1, we started with a simple premise that users could upload images onto surface projections. Quickly we decided on something more ambitious: to allow people to create and display their own creations. So, coupled with a pixel art aesthetic, we decided to allow people to create images with an 8x8 bitmap editor, position their images live on a wall and add 2D physical simulations with interesting behaviours to boot.

With 9.5 days to go, we didn’t have time for stories, project plans and the like. Rik and I set up a whiteboard and, with markers at the ready, planned the architecture for the app that day, discussing and solving the major challenges as we went: how to update the projectors live from people's phones, how to keep the projectors updated at the same rate, how to manage queuing seamlessly, and other aspects such as working out the physics simulation.

Day 2

24 hours later we had a working prototype to start hacking.

Isn’t life great without project managers?

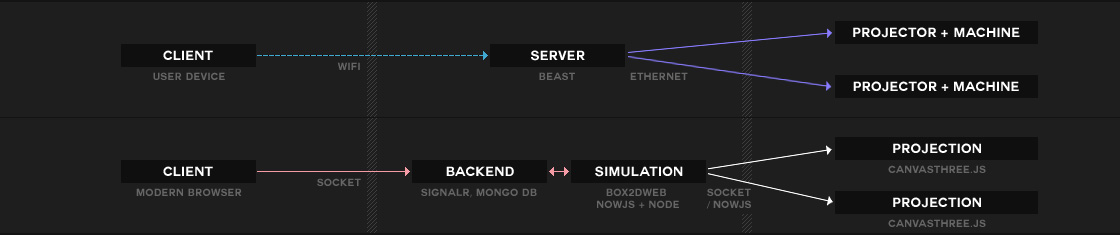

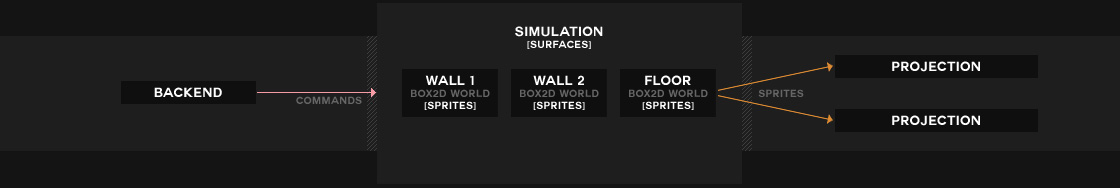

Here's the basic architecture we came up with in that initial whiteboard session:

- Client (multiple): the user can draw a sprite in 12x12 pixels, position it on a surface

- Server: handling clients, queuing if things get busy, saving sprites and sending them to the sim

- Simulation: handling positioning of sprites, physical behaviours, lifespan, sending data to projectors

- Projectors (multiple): adding sprites, positioning them with data from simulation, 3D rotation and configuration to align with BG.

Surprisingly this is exactly how the app worked in reality. We split the work as we normally would separating the ‘backend’ and ‘frontend’.

Rik worked on the backend, using SignalR and jQuery Mobile to allow people to draw and position their sprites on the walls in real time. I worked on the frontend simulation and projection, which is roughly organised like this:

- Simulation:

  - Receives commands from the server (configure, addSprite, Move, Activate) with a node socket server

  - Adds sprites to surfaces, and to box2d worlds if they have a behaviour

  - Sends sprites and positions to projectors via nowjs

- Projectors:

  - Take sprite data and positions and draw the surface to be projected onto a 3D plane
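
The simulation's command handling could be sketched as a small dispatcher like the one below. This is an illustration only: the JSON message shape, the payload fields, the `surfaces` store and the function names are all assumptions rather than the project's actual code, and the socket server and Box2D bodies are left out.

```javascript
// Hypothetical in-memory store: surfaceId -> lists of sprites on that surface.
const surfaces = new Map();

function getSurface(surfaceId) {
  if (!surfaces.has(surfaceId)) {
    surfaces.set(surfaceId, { painted: [], physical: [] });
  }
  return surfaces.get(surfaceId);
}

function findSprite(surfaceId, spriteId) {
  const surface = getSurface(surfaceId);
  const sprite = [...surface.painted, ...surface.physical].find(s => s.id === spriteId);
  if (!sprite) throw new Error('Unknown sprite: ' + spriteId);
  return sprite;
}

// Command names follow the list above; the payload fields are assumed.
const handlers = {
  configure(cmd) {
    Object.assign(getSurface(cmd.surfaceId), { width: cmd.width, height: cmd.height });
  },
  addSprite(cmd) {
    const sprite = { id: cmd.id, x: cmd.x, y: cmd.y, behaviour: cmd.behaviour || null, active: false };
    const surface = getSurface(cmd.surfaceId);
    // A sprite with a behaviour would also get a Box2D body here; others are just painted.
    (sprite.behaviour ? surface.physical : surface.painted).push(sprite);
  },
  Move(cmd) {
    Object.assign(findSprite(cmd.surfaceId, cmd.id), { x: cmd.x, y: cmd.y });
  },
  Activate(cmd) {
    findSprite(cmd.surfaceId, cmd.id).active = true;
  }
};

// Parse one JSON message from the server and route it to its handler.
function handleCommand(json) {
  const cmd = JSON.parse(json);
  if (!Object.prototype.hasOwnProperty.call(handlers, cmd.type)) {
    throw new Error('Unknown command: ' + cmd.type);
  }
  handlers[cmd.type](cmd);
}
```

Keeping one handler per command name means a new command only needs a new entry in `handlers`, which suits this kind of quick iteration.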

Simulation and worlds

Each user-created sprite is one of two types. Either it's ‘painted’ onto the background (boring). Or it has a behaviour attached and lives inside a Box2D world. The behaviours varied from simple 'building blocks' (static objects, not affected by gravity) to ‘love missiles’ (turning sprites they hit pink) and ‘mutate’ (merging the pixels of two sprites). And more!
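
To give a feel for how pixel-level behaviours like these can work, here is a minimal sketch. It assumes a sprite's pixels are stored as a grid (an array of rows) of colour strings, with `null` for an empty pixel. The checkerboard merge rule in `mutate`, the function names and the exact shade of pink are all invented for illustration; the article doesn't describe how the real behaviours combined pixels.

```javascript
const PINK = '#ff69b4'; // assumed colour for the 'love missile' effect

// Turn every drawn pixel pink, leaving empty pixels empty.
function turnPink(pixels) {
  return pixels.map(row => row.map(p => (p === null ? null : PINK)));
}

// Merge two equally sized sprites. Assumed rule: alternate between the two
// sprites in a checkerboard pattern, falling back to the other sprite's
// pixel wherever the preferred one is empty.
function mutate(a, b) {
  return a.map((row, y) => row.map((pixelA, x) => {
    const pixelB = b[y][x];
    const preferA = (x + y) % 2 === 0;
    const first = preferA ? pixelA : pixelB;
    const second = preferA ? pixelB : pixelA;
    return first !== null ? first : second;
  }));
}
```

Both functions return new grids rather than changing their inputs, so the original sprites stay available if a behaviour needs to be undone.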

In case you haven’t heard, Box2D is an easy-to-use physics engine that is pretty much the default now for 2D simulation in games. It’s what Angry Birds and Limbo are built with, and now DIY City 0.01a.

To use Box2D with node I used the box2dweb port, which is far more up to date (and easier to import) than box2djs. A word of warning though - you’ll be searching the source as there's not much useful documentation.

The simulation for DIY City 0.01a is made up of multiple box2d worlds. Each world usually maps to one surface, e.g. the floor would have one box2d world, and there would be another box2d world for the left wall. Each box2d world would then contain the sprites that were added to that surface.

Having a separate world for each surface helps when the behaviour needs to differ for a surface as a whole. For example, on the floor we wouldn't want normal gravity to affect the sprites, as there is no 'down': the floor is assumed to be flat. To spice things up a bit, we set the floor's gravity to match that of Mars (which makes the sprite movement more 'floaty') and rotated the gravity vector through 360 degrees every 10 minutes to keep the sprites moving. Other surfaces could be set up to have more friction and more negative gravity.
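
The rotating floor gravity comes down to a little trigonometry. The sketch below assumes a Mars surface gravity of roughly 3.71 m/s² and a vector that starts out pointing along the x axis. The constant and function names are made up for this example.

```javascript
const MARS_GRAVITY = 3.71;                 // m/s^2, approximate surface gravity of Mars
const ROTATION_PERIOD_MS = 10 * 60 * 1000; // one full turn every 10 minutes

// Gravity vector for the floor after `elapsedMs` milliseconds of simulation.
function floorGravity(elapsedMs) {
  const phase = (elapsedMs % ROTATION_PERIOD_MS) / ROTATION_PERIOD_MS; // 0..1
  const angle = 2 * Math.PI * phase;
  return {
    x: MARS_GRAVITY * Math.cos(angle),
    y: MARS_GRAVITY * Math.sin(angle)
  };
}
```

The strength of the pull never changes, only its direction, so sprites keep drifting around the floor rather than settling against one edge. The resulting x and y values could be handed to the floor's Box2D world on each tick.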

Projecting the sprites

Two aspects of the project make it important to have a real-time (or just consistent) transfer of information between the clients, simulation and projectors.

Firstly, the projectors need to receive the correct data at the same time, so that if objects from two different projections overlap, any difference between the two is negligible. As it's possible for each projector to project a different part of a surface (e.g. one projector projects the left half, another the right half), we needed to make sure you didn't notice sprites moving across the boundary between the two. Secondly, people could move a sprite on their device (most likely a phone) and see it move into the correct position on the wall.

In case you’ve been living under a rock: there’s been a lot of buzz about real-time updates to web apps using event-driven frameworks like Node.js. Nowjs makes the real-time element simpler by exposing the same objects and methods on the front and backend, essentially allowing them to behave like one program. The most useful feature of nowjs is groups, which were very quick to set up. Groups are essentially a list of clients that will receive any messages sent to the group. In our case, we had a group for each box2d world, and each world sent its updates to its group by calling the ‘updateCanvas’ method on every projector in it. The sprite positions were only sent for sprites that were active or needed to be removed from the projection.
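
As a rough picture of the idea, here is a plain JavaScript stand-in for a group, along with the 'only send what changed' filter. It does not use the nowjs API: the `Group` class, `buildUpdate` and the sprite fields (`active`, `removed`) are assumptions made for this sketch.

```javascript
// A group is just a set of clients that all receive the same call.
class Group {
  constructor() {
    this.clients = new Set();
  }
  add(client) {
    this.clients.add(client);
  }
  remove(client) {
    this.clients.delete(client);
  }
  // Call the named method, with the same arguments, on every client.
  call(method, ...args) {
    for (const client of this.clients) {
      client[method](...args);
    }
  }
}

// Keep only sprites that are moving or that projectors need to remove.
function buildUpdate(sprites) {
  return sprites
    .filter(s => s.active || s.removed)
    .map(({ id, x, y, removed }) => ({ id, x, y, removed: Boolean(removed) }));
}
```

With one group per world, a projector showing the left wall never receives traffic for the floor, and idle sprites cost nothing on the wire.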

Nowjs also lent itself well to quick configuration, which was really helpful on the night, allowing anyone connected to the projector to make quick changes to the simulations and ‘shake things up a bit’. Using ‘everyone’ here exposed the methods to any projector client connected.

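    // Exposed to every connected projector client through nowjs's `everyone` group.
    everyone.now.updateGravity = function (surfaceId, x, y) {
      // box2dweb keeps Box2D's capitalised method names, hence SetGravity.
      worlds[surfaceId].world.SetGravity(new b2Vec2(x, y));
    };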

Configuring backgrounds

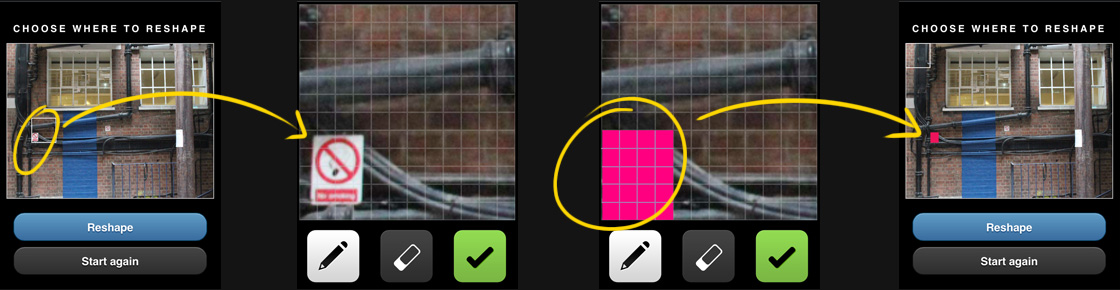

‘Painting’ - drawing on the surface without adding any fancy behaviours - is made more involving by showing the actual pixel data from that area in the client's drawing area: both the pixels that other users had drawn and the actual wall surface behind. This obviously presented the problem of lining up the projectors with the surfaces they were projecting on so that the client could, say, draw red over all the no smoking signs (a popular choice).

We took two photos of each surface: one with some big yellow markers showing the boundaries and perspective of the projection, and one without. It's then quite simple in Photoshop to chop out the area that the projection would cover. It took no more than 30 minutes on the night.

Obviously these didn’t match up exactly when we projected them onto the surfaces. We had projectors up and running very quickly for experimenting, and it showed that moving the projectors or adjusting their settings to get this match wouldn’t be very efficient. Pascal, our esteemed Technical Director, had the idea of setting up the projector views on a 3D plane, allowing us to change position, rotation, and so on, very easily for each projector with some simple dat.GUI controls. This also meant we didn’t have to change the surface perspectives of the photos in Photoshop.
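
One way to picture this kind of adjustable plane is as a set of numbers turned into a 3D transform. The sketch below assumes the projector view is an element positioned with a CSS transform, which the article doesn't confirm. The `planeTransform` function, the `DEFAULT_VIEW` fields and the slider ranges mentioned in the comments are hypothetical.

```javascript
// Assumed per-projector settings: offsets in pixels, rotations in degrees.
const DEFAULT_VIEW = {
  perspective: 800,
  x: 0, y: 0, z: 0,
  rotateX: 0, rotateY: 0, rotateZ: 0,
  scale: 1
};

// Build a CSS transform string that places the view on a 3D plane.
function planeTransform(view) {
  const v = Object.assign({}, DEFAULT_VIEW, view);
  return [
    `perspective(${v.perspective}px)`,
    `translate3d(${v.x}px, ${v.y}px, ${v.z}px)`,
    `rotateX(${v.rotateX}deg)`,
    `rotateY(${v.rotateY}deg)`,
    `rotateZ(${v.rotateZ}deg)`,
    `scale(${v.scale})`
  ].join(' ');
}

// In a browser, the string could be applied with something like
//   element.style.transform = planeTransform(view);
// and each field bound to a dat.GUI slider, for example
//   gui.add(view, 'rotateY', -45, 45).onChange(apply);
// where `element`, `gui`, `view` and `apply` are placeholders.
```

Nudging a slider until the projected photo lines up with the real wall is far quicker than physically moving a projector.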

Afterwards

Looking back, we achieved a lot in those 10 days (and they seem so long ago after a month back on projects). The rapid iterations and getting a working prototype so early really improved the quality of the result by allowing us to get feedback from anyone wandering past. Suggestions came in from all sides giving us ideas for many of the behaviours, the number of pixels affecting the weight, complex hitboxes and the perfect sprite size (we went from 8x8 to 12x12).

After seeing talks by Moritz Stefaner at FutureEverything and Carlos Ulloa at LFPUG, I’d been told a lot that the best way to maintain creativity is through lots of prototyping and iteration. I didn’t believe them. "Process all the way in the real world," I thought. Now I’m definitely sold, though working this way on a different project with a different client could be very difficult.

The most rewarding aspect was watching everyone using the application and seeing the courtyard morph and evolve throughout the night. The excitement of seeing your 12x12 pixel cat flying around a wall was far greater than we imagined. With the interest and buzz around the night we gathered a lot of interesting feedback (sound! Kinect! temperature sensors! pressure pads!) and had some great discussions on how to take DIY City further. You may even be able to reconfigure your own city soon! Keep an eye out.

One last piece of advice: your friend’s Netgear router isn’t going to last long with 40 people connected to it.

---

Gavin is Head of Flash at Specialmoves, but he’s not an idiot* @villaaston

Follow us on Twitter @specialmoves

*this is a Specialmoves in-joke which Gavin will surely be happy to explain if you ask him